Have we reached Peak AI-Hype?

If you’ve been in the IT/Software business long enough, you realize that the cycle of hype and disillusionment is an industry reality which manifests itself every few years. Every substantive (and some not-so-substantive) emerging technology will eventually succumb to the hype cycle and predictably suffer from the downturn as reality inevitably fails to live up to overblown expectations.

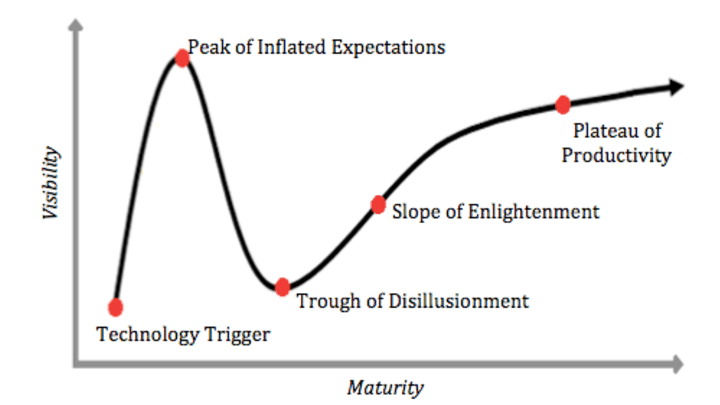

The hype cycle consists of five distinct stages of technological development and market adoption:

- Technology trigger

- Peak of inflated expectations

- Trough of disillusionment

- Slope of enlightenment

- Plateau of productivity

As the name suggests, at the peak of inflated expectations the technology is severely overhyped and expected to dramatically improve the world, while in reality the benefits it delivers are nowhere close to what was promised. Eventually and predictably, expectations adjust to the stubborn reality and the hype disappears. In the end, the previously hyped technology finds its niche, where it ends up doing quite well, but it never fulfils the world-altering promises which were made during the peak of inflated expectations.

Essentially, it looks like this:

Some Examples from previous Hype Cycles

- SQL: In retrospect it seems strange, but SQL was the subject of an early hype cycle. IBM pushed it as a Structured Query Language (hereafter SQL) which would enable normal (i.e., non-technical) users to query their databases for the data they needed to better understand and support their business processes; no longer would expensive programmers be needed to analyze data, because every manager or salesperson could do it themselves. At some point reality set in, and by the end of the 80s even IBM had to admit that SQL was too complex for business users and that it required specialized database programmers to generate insightful reports from the raw data stored across many tables or databases. If you've ever seen a complex SQL query doing multiple joins, subqueries and aggregations, you understand that this is not something non-technical users want to deal with.

- CASE Tools: During the 80s everybody was raving about the revolution which would be brought to software engineering by the use of CASE tools. If you’ve never heard of CASE tools, this is probably because they disappeared after their promises went unfulfilled. CASE stands for “Computer Aided Software Engineering”, and it was a set of tools that were expected to simplify the development of software applications by letting non-developers specify the structure and functionality of applications and the code would then be - almost magically - generated by those wonderful tools. The promise was that “we won’t need programmers anymore”. In practice, this was never even close to working the way it was advertised and 30 years later, experienced developers are still a much sought-after resource.

- Object Oriented Programming: As OOP started to seep into the mainstream software engineering market, there was a lot of talk about the immense benefits this new "paradigm" would bring to software engineering: development would be based on reusable components and everything would be radically simpler and faster. There were, however, issues: first of all, it took developers quite some time to adapt their skills to this new method, and many times the promises of code reuse and superior abstraction never materialized. As such, OOP ended up being just another set of tools in the software engineer's toolbox, and writing software, even with the benefits of properly applied OOP, remains slow and tedious.

- Java & XML: These somewhat overlapped, but if you read the trade press at the time, Java together with XML was going to bring us world peace, cure hunger & poverty, and basically usher in a new golden age. The reality turned out to be a bit more sobering, and while writing software in Java is easier than writing it in C, it is still the same fundamental process that we’ve used for the past 50 years; the radical benefits that were promised never materialized because the fundamental process of writing software didn’t change.

- Crypto/Blockchain: Blockchain is the underlying technology for Bitcoin and all other cryptocurrency derivatives. While it is a neat concept that does deliver a verifiable (distributed) ledger, for the most part it seemed (seems?) to be a solution in search of a problem. Yes, there are countless industries and processes such a verifiable ledger can be applied to, but just about all of these can be served just as well (or even better) using traditional technology. The main practical benefit seems to have been that it forced the traditional banking sector to innovate: in the EU, you can now do instant money transfers which are credited to the target account within seconds, thus negating one of the chief benefits cryptocurrency promised. There are other use cases such as smart contracts, which in turn allow things like DAOs, NFTs and a bunch of other stuff, but so far, we're still waiting to see whether the financial and/or technological revolution that was promised will actually be realized.

Can AI break the Pattern of previous Hype Cycles?

What is currently broadly called ‘AI’ is actually a specific subset of the Artificial Intelligence field: applications like GPT-3 or Midjourney are technically “Deep Learning” technologies, which essentially are (if you don't mind radical simplification) advanced statistical models based on existing knowledge. But let's not get hung up on technical subtleties ... programs like ChatGPT and Midjourney have obviously unleashed great enthusiasm and gathered millions of users in record time while performing feats which go far beyond what software has previously been able to do. Let's be honest: the ability to describe a scene and have Midjourney render an image which matches the description would have been considered magic in a previous age. Similarly, the fact that you can ask ChatGPT a question and get an answer which is (typically) mostly correct is an ability which has previously been reserved for humans.

So there is a chance that this time things are different and that history does not have to repeat itself. For the first time in human history, we have created (logical) machines that are capable of self-learning and which seem intelligent because they can do things which seem straight out of science fiction. There is little doubt that we are at the threshold of a technological revolution and AI has the potential to transform the way in which we work and live. But the true impact that AI will have on our lives is still largely uncertain, and it will take a few more years (or decades) until we start to see and understand the societal change this technology will bring with it. AI is already being used in numerous industries to take on tasks that would otherwise be too costly or time-consuming for humans to do, and to perform such tasks with a speed and analytical precision that far outstrip human capabilities. However, it’s important to be realistic about what the current learning models are capable of and what limitations they are subject to.

Problems of current Deep Learning implementations

Deep Learning algorithms are powerful but subject to some fundamental limitations:

- Extreme dependency on training data. There are lots of anecdotes of such systems focussing on tangential (or biased) data which was not recognized as being potentially problematic by their creators.

- While such systems may seem creative, this would be giving them too much credit. A better description of their modus operandi would be to say that they are "remixing" existing content and using their statistical models to predict what should come next for a given piece of content.

- They don’t have a concept of truth. Since they are based on statistical models, they will happily return data that is (to a human) obviously incorrect as long as it fits their statistical models.

- Furthermore, they don’t care whether what they tell you is true or not; this is not out of malice, but rather because given their internal models, they can’t care.

These issues may well be addressed in future refinements of the currently used deep learning models, but for now, they constitute an important limitation on their utility, one that many people using these models may not be aware of. And while AI technology has probably reached a revolutionary threshold, it’s important to be aware of its current limitations. Can the current euphoria be sustained? If history is a guide, you would be wise to say "most probably not". It may take a while for this realization to materialize, but it probably will happen.
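To make the "remixing" and "no concept of truth" points a bit more tangible, here is a deliberately tiny sketch in Python. It is not how GPT or any real deep learning model is implemented; it is a toy word-pair (bigram) counter, and the function names `train_bigram_model` and `generate` as well as the sample corpus are made up for this illustration. What it shares with the real thing, under that heavy simplification, is the basic idea of predicting the next word from statistics of the training text.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(lambda: defaultdict(int))
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(model, start, length, seed=0):
    """Produce up to `length` words by repeatedly sampling a likely next word."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    word = start
    output = [word]
    for _ in range(length - 1):
        followers = model.get(word)
        if not followers:
            break  # the model has never seen anything after this word
        candidates = list(followers)
        weights = [followers[c] for c in candidates]
        # Sample in proportion to how often each word followed in training.
        word = rng.choices(candidates, weights=weights)[0]
        output.append(word)
    return " ".join(output)


# Hypothetical two-sentence "training set": both statements are true.
corpus = "the moon orbits the earth . the earth orbits the sun ."
model = train_bigram_model(corpus)
print(generate(model, "the", 5))
```

Both training sentences are true, yet nothing stops this model from stitching them into something like "the moon orbits the sun", because every word pair in that sentence was seen during training. The model has no way to check the result against the world; it only knows which words tend to follow which.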

Conclusion

Even if the current AI craze is overhyped, this technology will not disappear. At the very worst, it will be improved and refined and find its use in specialized applications where it may well replace part (or most?) of the human workforce. At the very best, it will enable a greater part of the population to lead creative and meaningful lives, although, given human nature, that may be hoping for too much.

I’m not going to bore you with some fancy-sounding but generic conclusion about having realistic expectations and/or responsible use of these technologies; you can read a trade magazine for that. I just think that right now everything suggests that we're relatively close to the peak of inflated expectations phase of the hype cycle and that, if history is a guide, the trough of disillusionment may be just around the proverbial corner.

Disclaimer

I am not an AI researcher or expert. I have however been following current developments in this field for some time and consider myself reasonably well informed. As such, anything I say about this subject should be taken with a grain (if not a bucket) of salt.

Addendum 2023-03-14

Not to be left behind, Google has announced integration of its generative AI model PaLM (Pathways Language Model) into its Workspace programs. This means that you'll have access to an AI assistant in Gmail and Google Docs. It's still a bit early to evaluate this, but I'd be surprised if Google doesn't manage to offer something of similar quality and capability to GPT-3 (and maybe even GPT-4). Either way, the AI race is on!

Addendum 2023-03-15

GPT-4 was released yesterday. This represents a significant qualitative and quantitative improvement to the model's capabilities, delivered an impressively short time after the release of the GPT-3 and GPT-3.5 models. Besides the fact that GPT-4 is multimodal (i.e., it can accept graphical input), initial reports seem to indicate that it is a major step forward both in general capability and in quality. The prose it delivers seems to be more eloquent and less repetitive, it is better at generating programming code and it is able to analyze longer blocks of text. Anybody who's been witness to the exponential improvement of computer hardware over the past four decades (driven by Moore's Law) will find it natural to extrapolate similar advances based on the potential of current AI technology. This is actually a (sort of) scary thought, but such advances are coming, whether we like it or not.

Addendum 2023-03-16

Midjourney version 5 was released yesterday. Initial reports seem to indicate that it's a marked improvement over V4 and that many issues (the hands!!!) which were telltale signs of AI-generated images were fixed (or at least substantially improved). Some of the comparative images (and new images) that I've seen seem to suggest a more real (or less synthetic) feel to the generated images. These are just some of the specific improvements which I noticed; there are probably lots of other improvements which will not be apparent from the initial test images people have posted online, but I think it's safe to say that V5 is a major step forward in Midjourney's capabilities. For photographic evidence, the question of "what is real" will become even more urgent than it has already been. For graphical art (paintings, drawings, etc.), time will tell what the value of human-generated (i.e., without AI help) art will be and how it will evolve.

Updated Conclusion

Right now the hype is strong and is being fueled and sustained by significant updates to the flagship AI products. Are we at (or at least close to) peak hype? I'd say yes; I don't really see how the hype could be much stronger. Regular (i.e., non-technical) newspapers and magazines are reporting on these latest updates and right now the possibilities seem limitless. Will we face some sort of disillusionment? Probably yes, although when and to what degree remains to be seen. But the fact is that AI has arrived in force and will not disappear again; we should learn and prepare to live with the possibilities AI offers, because we're not going to avoid its impact. Unlike previous tech hypes (see the list above), AI even in its current form will not be relegated to tech jobs, but will rather permeate our societies in ways we cannot yet foresee. It's up to us to make the best of it and try to guide its use towards adoption which will result in a positive outcome for our societies. Let's for the time being hope and assume that such an arrangement is possible, because the alternative might not be a pretty place.